In daily life we constantly perform activities such as walking, sleeping, running, and sitting. What if we could also keep track of which activity we are performing, in real time?

Interesting?

In this article, I will show how to build your own Human Activity Recognition app with deepC and cAInvas. The app detects six activities: walking, walking_downstairs, walking_upstairs, laying, standing, and sitting.

Getting Started

The notebook uses a human activity recognition dataset containing acceleration and angular velocity measurements recorded along all three spatial axes (X, Y, Z). These measurements are used to train a model that predicts which of the six activities is being performed.

The training set contains 7352 records and the test set contains 2947 records; both have 563 variables.

To start, let’s do some exploratory analysis to understand the various measurements and how they relate to the activities.

Exploratory Analysis

The dataset used in this article is available here.

The training data has 7352 observations of 563 variables. The first few columns hold the mean and standard deviation of body acceleration along the three spatial axes (X, Y, Z). The last two columns, “subject” and “Activity”, identify the subject the observation was taken from and the activity being performed. Let’s see which activities have been recorded in this data.

Each observation corresponds to one of the six activities, and its features are stored in the remaining 561 variables (the 563 columns minus “subject” and “Activity”). Our goal is to train a model that predicts the activity from these 561 features.
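As a rough illustration of this setup, the sketch below separates the feature columns from “subject” and “Activity”, counts how often each activity occurs, and one-hot encodes the labels (the form a softmax output trained with categorical cross-entropy expects). It uses only the Python standard library and assumes the data is a CSV file with a header row; the function names and the three-feature sample (`f1`, `f2`, `f3`) are hypothetical stand-ins, not the notebook's actual code.

```python
import csv
import io
from collections import Counter


def split_rows(csv_file):
    """Split each CSV row into (features, subject, activity).

    Assumes a header row and that every column other than "subject" and
    "Activity" is a numeric feature (561 of them in the real data).
    """
    features, subjects, labels = [], [], []
    for row in csv.DictReader(csv_file):
        subjects.append(int(row.pop("subject")))
        labels.append(row.pop("Activity"))
        # The remaining values keep the header's column order.
        features.append([float(value) for value in row.values()])
    return features, subjects, labels


def one_hot(labels):
    """Map each label to a one-hot vector; classes are sorted alphabetically."""
    classes = sorted(set(labels))
    index = {name: i for i, name in enumerate(classes)}
    vectors = [[1 if i == index[label] else 0 for i in range(len(classes))]
               for label in labels]
    return classes, vectors


# Hypothetical three-feature sample standing in for the real 563-column file.
sample = io.StringIO(
    "f1,f2,f3,subject,Activity\n"
    "0.1,0.2,0.3,1,WALKING\n"
    "0.4,0.5,0.6,1,SITTING\n"
    "0.7,0.8,0.9,2,WALKING\n"
)

X, subjects, y = split_rows(sample)
classes, Y = one_hot(y)
counts = Counter(y)

print(counts)   # Counter({'WALKING': 2, 'SITTING': 1})
print(classes)  # ['SITTING', 'WALKING']
print(Y)        # [[0, 1], [1, 0], [0, 1]]
```

On the real data, each row of `X` would hold 561 values and `one_hot` would produce six classes.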

Building the model

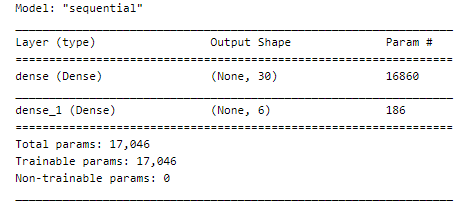

The model uses two fully connected (dense) layers: a hidden layer of 30 neurons with ReLU activation, followed by an output layer of 6 neurons (one per activity) with softmax activation.
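To make the architecture concrete, here is a dependency-free sketch of the forward pass: 561 inputs, a 30-neuron ReLU layer, and a 6-neuron softmax layer. It is not the notebook's code (which presumably relies on a deep learning framework such as Keras); the random weight initialisation and all helper names are illustrative assumptions. It also counts the trainable parameters (weights plus biases) of each dense layer.

```python
import math
import random

N_FEATURES = 561  # feature columns per observation
N_HIDDEN = 30     # hidden dense layer, ReLU
N_CLASSES = 6     # output dense layer, softmax (one neuron per activity)


def dense_param_count(n_in, n_out):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out


def init_layer(n_in, n_out, rng):
    """Hypothetical small random weights and zero biases, for illustration only."""
    weights = [[rng.uniform(-0.05, 0.05) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, weights, bias):
    """y[j] = sum_i weights[j][i] * x[i] + bias[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, x) for x in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, hidden_layer, output_layer):
    hidden = relu(dense(x, *hidden_layer))
    return softmax(dense(hidden, *output_layer))


rng = random.Random(0)
hidden_layer = init_layer(N_FEATURES, N_HIDDEN, rng)
output_layer = init_layer(N_HIDDEN, N_CLASSES, rng)

x = [rng.random() for _ in range(N_FEATURES)]  # a made-up feature vector
probs = forward(x, hidden_layer, output_layer)

n_params = dense_param_count(N_FEATURES, N_HIDDEN) + dense_param_count(N_HIDDEN, N_CLASSES)
print(n_params)                 # 17046
print(len(probs))               # 6
print(probs.index(max(probs)))  # index of the predicted activity
```

With these layer sizes the hidden layer has 561 × 30 + 30 = 16,860 parameters and the output layer 30 × 6 + 6 = 186, for 17,046 in total, which is small by deep learning standards.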

Training and testing the model

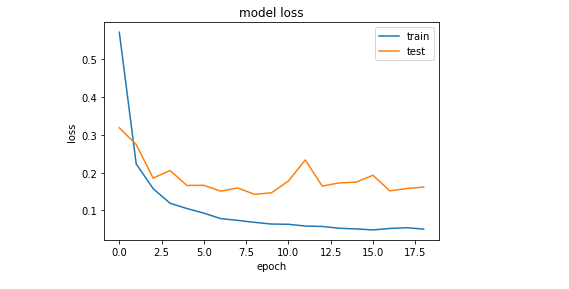

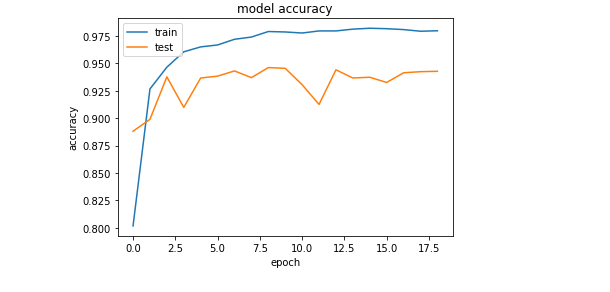

Next, we train the neural network for 25 epochs with early stopping, which monitors the validation loss with a patience of 10 epochs.
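The early-stopping rule can be sketched in plain Python. This is a simplified model of the idea (stop once the validation loss has failed to improve for `patience` consecutive epochs), loosely following Keras's `EarlyStopping` callback with its default `min_delta=0`; the function name and the loss curves below are hypothetical.

```python
def early_stopping(val_losses, patience=10, max_epochs=25):
    """Simulate early stopping on validation loss.

    Returns (epochs_run, best_epoch), both 1-based. Training stops once
    `patience` consecutive epochs fail to improve on the best loss so far
    (any decrease counts as an improvement, i.e. min_delta = 0).
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    epochs_run = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        epochs_run = epoch
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                break
    return epochs_run, best_epoch


# Hypothetical loss curves for illustration.
improving = [1.0 - 0.02 * i for i in range(25)]    # improves every epoch
plateau = [1.0, 0.8, 0.6, 0.5, 0.4] + [0.45] * 20  # best loss at epoch 5

print(early_stopping(improving))  # (25, 25)
print(early_stopping(plateau))    # (15, 5)
```

The two settings interact: with a 25-epoch budget and a patience of 10, early stopping can only end training before epoch 25 if the best validation loss occurs at epoch 14 or earlier; otherwise all 25 epochs run.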

The model is trained with the ‘categorical_crossentropy’ loss and the Adam optimizer with a learning rate of 0.001.
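For reference, both pieces can be written out directly. The sketch below computes the mean categorical cross-entropy of softmax outputs against one-hot labels, then performs one textbook Adam update on a single scalar parameter with a learning rate of 0.001. The article only specifies the learning rate; β₁ = 0.9, β₂ = 0.999, and ε = 1e-7 are assumed common defaults, the clipping constant is an assumption to avoid log(0), and framework implementations may place ε slightly differently.

```python
import math


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean categorical cross-entropy over a batch.

    y_true: one-hot rows; y_pred: rows of predicted probabilities (softmax output).
    Probabilities are clipped to [eps, 1 - eps] so log(0) never occurs.
    """
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total -= sum(t * math.log(min(max(p, eps), 1.0 - eps))
                     for t, p in zip(t_row, p_row))
    return total / len(y_true)


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One textbook Adam update for a scalar parameter at step t (1-based)."""
    m = beta1 * m + (1 - beta1) * grad         # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)               # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


y_true = [[0, 1, 0, 0, 0, 0]]
y_pred = [[0.1, 0.5, 0.1, 0.1, 0.1, 0.1]]
loss = categorical_crossentropy(y_true, y_pred)
print(round(loss, 4))  # 0.6931, i.e. -ln(0.5)

theta, m, v = adam_step(theta=0.0, grad=2.0, m=0.0, v=0.0, t=1)
print(round(theta, 6))  # -0.001
```

Only the true class contributes to the loss, so a confident correct prediction drives it toward zero. On the first Adam step the bias-corrected update is about `lr × sign(gradient)`, so the parameter moves by roughly 0.001 regardless of the gradient's magnitude.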

Finally, testing the model gives a validation accuracy of approximately 94.27%.

Loss graph of the model

Accuracy graph of the model

Compiling with deepC

To bring the saved model onto a microcontroller (MCU), install deepC, an open-source, vendor-independent deep learning library, compiler, and inference framework for microcomputers and microcontrollers.

Here’s the link to the complete notebook: https://cainvas.ai-tech.systems/use-cases/human-activity-recognition-app/

Credits: Pradeep Babburi

Written by: Sanlap Dutta
