In the United States and Canada, many deaf and hard-of-hearing people use American Sign Language (ASL) as their primary means of communication. Originating in the 19th century, the language has evolved significantly over time and relies mostly on hand movements.

However, knowledge of sign language is usually limited to the deaf community and their close circle.

Recent progress in IoT sensing and tinyML devices aims to bridge this gap by translating sign language into spoken language. Neural networks, when trained on an adequate amount of sensor data, can learn to detect the phonemic patterns of ASL.

As the world moves towards processing at the edge, innovative methods of communication come into the picture.

Wearable ASL gloves are one such example, intended to serve the deaf and mute community. A device like this could empower around 70 million deaf or mute people to communicate efficiently with people who don’t understand sign language.

In this article, we present an end-to-end solution for developing wearable glove technology that can convert sign gestures into spoken words and sentences.

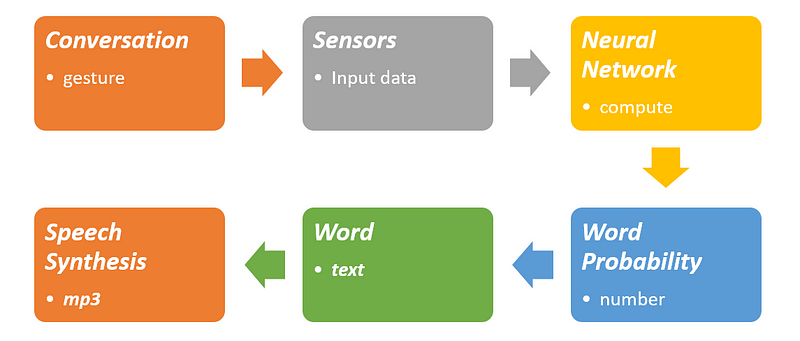

These gloves embed sensors alongside a tiny microcontroller (MCU) with an ARM Cortex™-M4 processor. Data is captured using a finger-mounted accelerometer and gyroscope, then processed by a neural network running on the MCU to decode each sign into its corresponding spoken word. The decoded words are then synthesized as speech in real time.
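Before a neural network can classify a gesture, the continuous accelerometer and gyroscope stream is typically cut into fixed-length windows. The snippet below is a minimal sketch of that step, not the pipeline used in the notebook: the function names `make_windows` and `normalize`, the six-channel layout (three accelerometer axes plus three gyroscope axes), and the window and step sizes are all assumptions chosen for illustration.

```python
import numpy as np


def make_windows(samples, window_size, step):
    """Split a (num_samples, num_channels) sensor stream into overlapping windows.

    Hypothetical helper: returns an array of shape
    (num_windows, window_size, num_channels).
    """
    samples = np.asarray(samples, dtype=np.float32)
    if samples.ndim != 2:
        raise ValueError("expected a 2-D array of shape (num_samples, num_channels)")

    # Start index of every window that fits completely inside the stream.
    starts = range(0, len(samples) - window_size + 1, step)
    windows = [samples[s:s + window_size] for s in starts]

    if not windows:
        # Stream shorter than one window: return an empty, correctly shaped array.
        return np.empty((0, window_size, samples.shape[1]), dtype=np.float32)
    return np.stack(windows)


def normalize(windows):
    """Scale each sensor channel to zero mean and unit variance."""
    mean = windows.mean(axis=(0, 1), keepdims=True)
    std = windows.std(axis=(0, 1), keepdims=True)
    # The small constant avoids division by zero for a constant channel.
    return (windows - mean) / (std + 1e-8)
```

With an assumed stream of 100 samples and 6 channels, `make_windows(stream, window_size=50, step=25)` yields three half-overlapping windows, each of which could be fed to the classifier as one input example.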

With these elements in mind, we will train a neural network to classify ASL gestures and then test its performance on our native platform, cAInvas.
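Testing a gesture classifier usually comes down to comparing its predicted labels with the true ones. The following is a rough sketch of such an evaluation using only NumPy; it assumes the model outputs one row of class probabilities per gesture, and the helper names and the example vocabulary (`"hello"`, `"thanks"`, `"yes"`) are hypothetical rather than taken from the notebook.

```python
import numpy as np


def predict_labels(probabilities, class_names):
    """Map each row of class probabilities to the most likely word."""
    indices = np.argmax(probabilities, axis=1)
    return [class_names[i] for i in indices]


def confusion_matrix(true_idx, pred_idx, num_classes):
    """Count predictions: rows are true classes, columns are predicted classes."""
    matrix = np.zeros((num_classes, num_classes), dtype=np.int64)
    for t, p in zip(true_idx, pred_idx):
        matrix[t, p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of correct predictions (the diagonal of the confusion matrix)."""
    total = matrix.sum()
    return float(np.trace(matrix)) / total if total else 0.0


# Illustrative values only: four gestures scored against three assumed classes.
class_names = ["hello", "thanks", "yes"]
probabilities = np.array([
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
true_idx = [0, 1, 2, 1]
pred_idx = np.argmax(probabilities, axis=1)
matrix = confusion_matrix(true_idx, pred_idx, num_classes=3)
```

The confusion matrix is worth inspecting alongside the overall accuracy, since it shows which signs are mistaken for which others, something a single number hides.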

cAInvas is a developer platform that is useful to a wide range of professionals, from data scientists to embedded developers.

Sample Graph Data:

Installing deepC:

To run the saved model on an MCU, install deepC, an open-source, vendor-independent deep learning library, compiler, and inference framework for microcomputers and microcontrollers.

Compiling Model for Cortex-M4:

Generating C++ Code:

If you wish to edit the generated C++ file, cAInvas offers a feature to convert it into an IPYNB file so that you can make the necessary changes.

cAInvas greatly simplifies AI development for edge devices. It supports ML models from popular frameworks such as TensorFlow, Keras, and PyTorch.

Best of all, it is free for all students, researchers, hobbyists, and individual programmers, to help speed the adoption of ML models on TinyML devices!

Here’s the link to the complete notebook: ASL Recognition using cAInvas
