deepSea

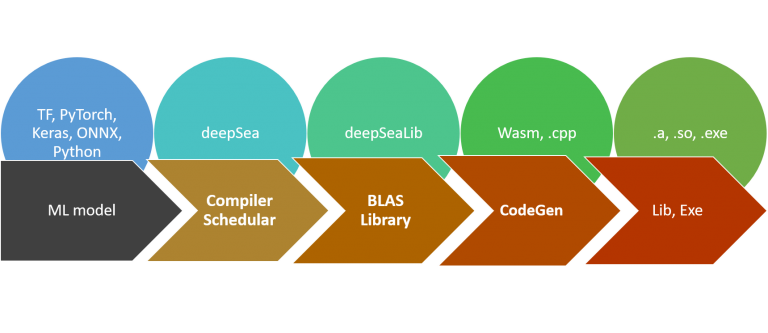

deepSea is a vendor-independent deep learning library, compiler, and inference framework targeted at edge, IoT, and microcontroller devices.

deepSea compiles ML models from popular frameworks, including TensorFlow, Keras, PyTorch, Caffe, and ONNX, to a variety of bare-metal and RTOS-based MCUs. It supports 125+ operators across vision, audio, and sensor-based applications. Smart applications produced by deepSea have been tested on ARM Cortex, Xtensa, and x86 targets on our cAInvas platform.

The deepSea edge compiler enables developers to run ML models on edge devices, including microcontrollers. Because compute, memory, and storage are scarce on edge devices, deepSea is designed to reduce all three. Compared to TFLite Micro models for microcontrollers, models deployed with deepSea use up to 50% less RAM, up to 60% less flash, and 10-30% fewer compute cycles, while maintaining a high degree of correlation in their outputs, to the third decimal place.
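One way to read "correlation to the third decimal place" is that the compiled model's outputs match a reference model's outputs when rounded to three decimals. The sketch below checks that under this assumed reading. The function names `max_abs_diff` and `agrees_to_decimals` are hypothetical helpers for illustration and are not part of deepSea.

```python
def max_abs_diff(reference, candidate):
    """Largest element-wise absolute difference between two output vectors."""
    if len(reference) != len(candidate):
        raise ValueError("output vectors must have the same length")
    return max(abs(r - c) for r, c in zip(reference, candidate))


def agrees_to_decimals(reference, candidate, decimals=3):
    """True if every output differs by less than half a unit in the given decimal place.

    This is an assumed acceptance criterion, not one documented by deepSea.
    """
    tolerance = 0.5 * 10 ** -decimals
    return max_abs_diff(reference, candidate) < tolerance


# Illustrative values only, not measured results.
reference_out = [0.1234, 0.9000]
compiled_out = [0.1236, 0.9003]
print(agrees_to_decimals(reference_out, compiled_out))
```

In practice the comparison would run over many input samples, since a single inference says little about overall agreement.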

deepSea is built on a unique modular architecture dedicated to microcontrollers. It achieves these optimizations by converting models to a high-level compute graph backed by a BLAS library of operators. It can generate intermediate code in embedded C/C++, WebAssembly, and other high-level languages. By default, it produces a cross-compiled binary for the underlying hardware board.
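To make the idea of a compute graph lowered to C concrete, here is a toy sketch. It is not deepSea's internal representation or API: the `Node` class, the `C_TEMPLATES` table, and the `topo_order` and `emit_c` functions are all hypothetical names, and the operator set is limited to three element-wise operators on equally sized vectors.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One operator in a toy compute graph (hypothetical, not deepSea's IR)."""
    name: str     # name of the tensor this node produces
    op: str       # operator kind, a key of C_TEMPLATES
    inputs: list  # names of the tensors this node reads


# Hypothetical C expression templates for a tiny element-wise operator library.
C_TEMPLATES = {
    "add": "{a}[i] + {b}[i]",
    "mul": "{a}[i] * {b}[i]",
    "relu": "({a}[i] > 0.0f ? {a}[i] : 0.0f)",
}


def topo_order(nodes):
    """Order nodes so that each one comes after the nodes it reads from."""
    by_name = {n.name: n for n in nodes}
    ordered, seen = [], set()

    def visit(node):
        if node.name in seen:
            return
        seen.add(node.name)
        for inp in node.inputs:
            if inp in by_name:  # graph inputs have no producing node
                visit(by_name[inp])
        ordered.append(node)

    for n in nodes:
        visit(n)
    return ordered


def emit_c(nodes, graph_inputs, output, size):
    """Emit a C function that evaluates the graph on vectors of length `size`."""
    params = ", ".join(f"const float *{name}" for name in graph_inputs)
    lines = [f"#define N {size}",
             f"void run_model({params}, float *{output}) {{"]
    ordered = topo_order(nodes)
    for n in ordered:
        if n.name != output:  # intermediates live in local buffers
            lines.append(f"    float {n.name}[N];")
    for n in ordered:
        expr = C_TEMPLATES[n.op].format(**dict(zip("ab", n.inputs)))
        lines.append(f"    for (int i = 0; i < N; ++i) {n.name}[i] = {expr};")
    lines.append("}")
    return "\n".join(lines)


# y = relu(x + w), with the nodes deliberately listed out of order.
graph = [Node("y", "relu", ["t"]), Node("t", "add", ["x", "w"])]
print(emit_c(graph, ["x", "w"], "y", 4))
```

A production compiler would also fuse loops, reuse buffers across layers, and select kernels per target. The sketch only shows the overall shape of the graph-to-C step.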

If your organization provides edge accelerator IP, a board, or a complete solution, the NN layer scheduler plays a crucial role in bringing out the performance and power advantages of your product. deepSea is the industry-leading compiler for optimizing layer scheduling for custom and brand-new hardware devices.

Testimonials

“Great product, great technology.”

– Dr. John C., NASA

“This project provides game changing technology.”

– Robert E., Ph.D

“We are excited to work with AITS to develop low cost and real time solutions for multimodal sensor fusion.”

– Prof Jia L.

“We are confident that AI-Tech Systems will be successful in expanding the development and capabilities of tinyML for space weather applications.”

– Dr. Linda H, NASA

“They (AITS) appear well-positioned to build success by applying their experiences, skill and innovative ideas.”

– Dr. Chris R, VP Cisco, IEEE fellow

“We’re keen on using the AITS platform & expertise.”

– Dr. Aparna A, CSIR

“The tool chain you have is pretty attractive for an embedded developer.”

– Shridhar C, MCU Vendor