Recognize common gestures using accelerometer and gyroscope sensors, and use them to drive a home automation system.

TinyML – A gesture is the movement of the hand to express an idea or meaning. There are various ways of reading a gesture.

The aim of this notebook was not only to develop a gesture recognition system but also to experiment with using it in a home automation system. With this in mind, several techniques were considered:

- Hand pose estimation using cameras: While pose estimation is a promising technique, it would be inconvenient to step into the camera’s field of view every time we want to perform a gesture. An alternative would be to place cameras that cover every angle of the house, but that would be both an inconvenience and a security risk.

- Hand pose estimation using IR tracking: Here too, we have to move into the field of projection for a gesture to be recognized.

- Using the accelerometer and gyroscope sensors: These sensors return acceleration and rotation values along the x, y, and z axes, recorded while the hand moves. Embedding them in a wearable device removes the need to stand in a particular spot, which simplifies gesture recognition.

This notebook uses the accelerometer and gyroscope sensors. Gestures are mapped to the corresponding sensor values recorded during motion. Here, accelerometer and gyroscope values along the x, y, and z axes are recorded 100 times for one gesture, i.e., 600 data points per gesture (2 sensors × 3 axes × 100 samples).
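To make the 600-value layout concrete, here is a minimal sketch that splits one flat recording into its six channels. The ordering of values inside a recording is not stated in this post, so the time-major order used here (six readings for sample 0, then six for sample 1, and so on) is an assumption, as are the channel names.

```python
SAMPLES_PER_GESTURE = 100
# Channel names and their order are assumptions made for illustration.
CHANNELS = ("acc_x", "acc_y", "acc_z", "gyro_x", "gyro_y", "gyro_z")


def split_channels(flat):
    """Split a flat list of 600 readings into one list per channel.

    Assumes time-major order: the six readings of sample 0 come first,
    then the six readings of sample 1, and so on.
    """
    expected = SAMPLES_PER_GESTURE * len(CHANNELS)
    if len(flat) != expected:
        raise ValueError(f"expected {expected} values, got {len(flat)}")
    # flat[i::6] picks every sixth value, starting at channel offset i.
    return {name: flat[i::len(CHANNELS)] for i, name in enumerate(CHANNELS)}


flat = [float(v) for v in range(600)]  # placeholder data, not real readings
channels = split_channels(flat)
```

Each entry of `channels` then holds the 100 samples of one axis of one sensor, which is the form needed for plotting a gesture axis by axis.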

Link to the notebook on cAInvas — here

Link to the Github repo — here

TinyML – Data collection

Since there was no Arduino device available at hand, the sensors in our smartphones were used.

Check out the app used to record the gestures here

This app returns a text file containing n lines, each with 601 values (600 sensor readings plus the gesture name). These values were then consolidated using the CSV library.
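The consolidation step could look roughly like the sketch below, which uses Python's standard `csv` module. The exact file format produced by the app is not described here, so the comma-separated layout with the gesture name as the last field is an assumption, and `consolidate` and `fake_line` are hypothetical helper names.

```python
import csv
import io

VALUES_PER_LINE = 601  # 600 sensor readings + 1 gesture name


def consolidate(text_sources, out_file):
    """Copy every complete recording line from the sources into one CSV.

    Returns the number of rows written. Lines that do not have exactly
    601 fields (blank or truncated lines) are skipped.
    """
    writer = csv.writer(out_file)
    written = 0
    for source in text_sources:
        for row in csv.reader(source):
            if len(row) != VALUES_PER_LINE:
                continue
            readings = [float(v) for v in row[:-1]]
            writer.writerow(readings + [row[-1].strip()])
            written += 1
    return written


def fake_line(label):
    """Build one made-up recording line for the demo below."""
    return ",".join(["0.5"] * 600 + [label]) + "\n"


# Two in-memory "files" stand in for the text files returned by the app.
file_a = io.StringIO(fake_line("left_fall") + fake_line("forward_fall"))
file_b = io.StringIO(fake_line("down_to_up") + "1,2,3\n")  # last line is truncated
out = io.StringIO()
count = consolidate([file_a, file_b], out)
```

With real recordings, the `io.StringIO` objects would be replaced by files opened with `open(path, newline="")`.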

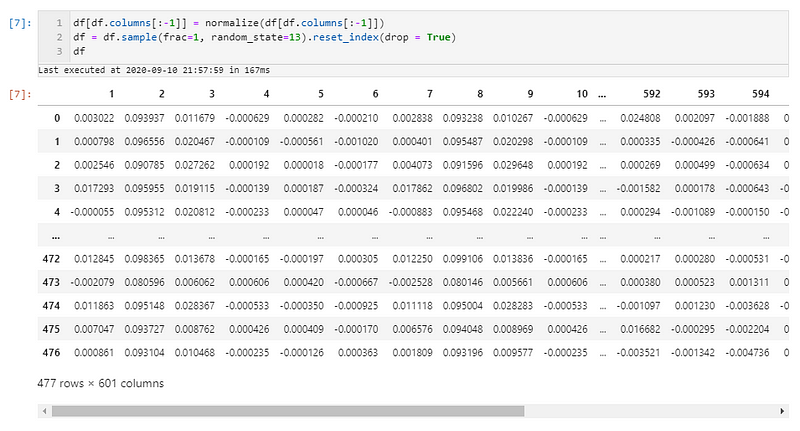

The dataset was normalized and then shuffled.
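The normalization method is not specified in this post. As one possible reading, the sketch below applies min-max scaling over the whole dataset and then shuffles the rows and their labels with the same permutation. Both helper names and the fixed seed are assumptions for illustration.

```python
import random


def min_max_normalize(rows):
    """Scale every reading to [0, 1] using the global min and max."""
    lo = min(min(r) for r in rows)
    hi = max(max(r) for r in rows)
    span = (hi - lo) or 1.0  # avoid division by zero for constant data
    return [[(v - lo) / span for v in r] for r in rows]


def shuffle_together(rows, labels, seed=0):
    """Shuffle rows and labels with the same permutation."""
    order = list(range(len(rows)))
    random.Random(seed).shuffle(order)
    return [rows[i] for i in order], [labels[i] for i in order]


# Tiny made-up dataset: three "recordings" with three readings each.
rows = [[-2.0, 0.0, 2.0], [1.0, 4.0, 6.0], [0.0, 3.0, -1.0]]
labels = ["left_fall", "down_to_up", "forward_fall"]

scaled = min_max_normalize(rows)
shuffled_rows, shuffled_labels = shuffle_together(scaled, labels)
```

Shuffling through a shared index list keeps every recording paired with its gesture name, which matters more than the particular scaling scheme chosen.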

Around 60 instances each were recorded for 8 gestures: down_to_up, forward_clockwise, left_fall, up_clockwise, up_anticlockwise, left_to_right, right_to_left, forward_fall.

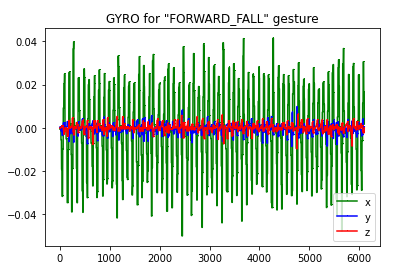

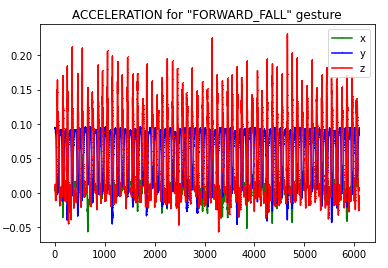

Visualization

The data from the two sensors were plotted separately. The plots let us see how similar the recordings are and pick out outliers, if any.

Here are the sensor plots for the gesture ‘forward_fall’ along the three axes.

The model has four layers with ReLU activation and one with softmax activation.
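As a rough illustration of that structure, here is a plain-Python forward pass with four ReLU layers followed by a softmax layer. Only the input size (600 values) and the output size (8 gestures) come from this post. The use of fully connected layers, the hidden widths, and the random placeholder weights are all assumptions, so this is a sketch of the shape of the network, not the trained model from the notebook.

```python
import math
import random


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(rng, n_in, n_out):
    """Random placeholder weights; a trained model would load real ones."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


# 600 inputs and 8 outputs follow the post; hidden widths are hypothetical.
LAYER_SIZES = [600, 64, 32, 16, 16, 8]

rng = random.Random(0)
layers = [init_layer(rng, a, b) for a, b in zip(LAYER_SIZES, LAYER_SIZES[1:])]


def predict(sample):
    """Return one probability per gesture class for a 600-value sample."""
    out = sample
    for weights, biases in layers[:-1]:
        out = relu(dense(out, weights, biases))  # the four ReLU layers
    weights, biases = layers[-1]
    return softmax(dense(out, weights, biases))  # the softmax output layer


probs = predict([0.5] * 600)
```

The softmax output gives one probability per gesture, and the predicted gesture is the index with the highest value.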

We were able to achieve 95.8% accuracy on the test set.

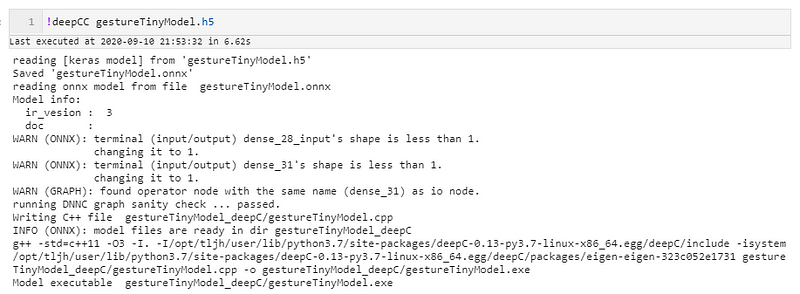

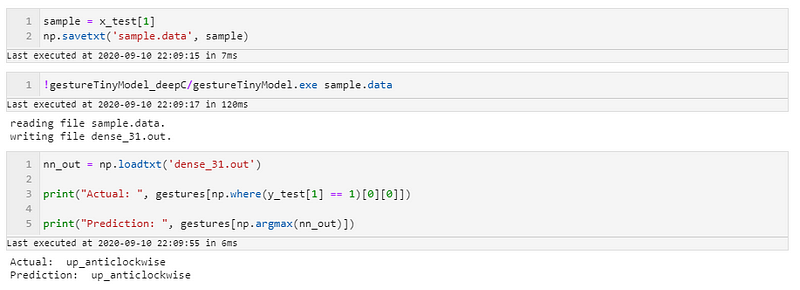

deepC

deepC is a deep learning compiler and inference framework for running deep learning models on edge devices such as Raspberry Pi, Arduino, etc. It uses an ONNX front end and the LLVM compiler toolchain as its back end.

TinyML – Home automation application

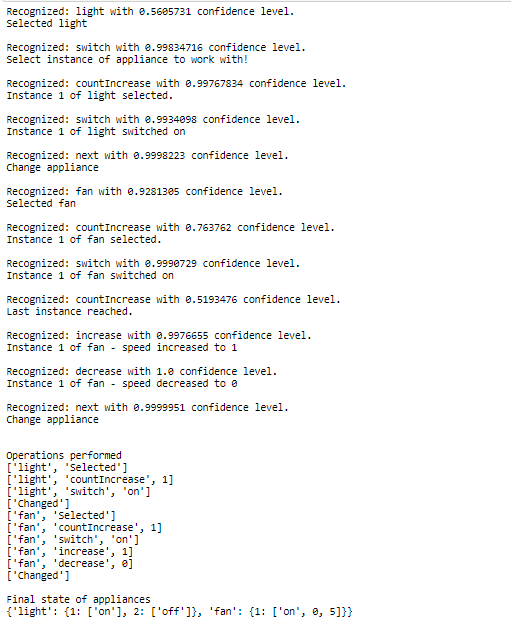

The snippet below is a proof of concept (POC) for gesture-based home automation: controlling the various appliances at home with gestures.

The aim is to embed the sensors in a wearable device.

We move our hands in so many different ways that there is a high chance one of these movements maps to a recorded gesture and triggers a response. To counter this, we can include a tiny button or some other mechanism to indicate the start of a gesture.
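The start-of-gesture idea can be sketched as a simple gate in front of the action lookup. Everything specific here is hypothetical: the mapping from gestures to appliance actions, the confidence threshold (an extra safeguard not mentioned above), and the `handle_window` function itself.

```python
# Hypothetical mapping from recognized gestures to appliance actions.
ACTIONS = {
    "down_to_up": "lights on",
    "left_to_right": "fan on",
    "right_to_left": "fan off",
}

# Assumed cut-off below which a prediction is ignored.
CONFIDENCE_THRESHOLD = 0.9


def handle_window(button_pressed, gesture, confidence):
    """Return the action to trigger, or None if nothing should happen.

    A prediction is acted on only when the wearer has signalled the
    start of a gesture (button_pressed) and the model is confident.
    """
    if not button_pressed:
        return None  # ordinary hand movement, ignore it
    if confidence < CONFIDENCE_THRESHOLD:
        return None  # gesture was signalled but not recognized clearly
    return ACTIONS.get(gesture)  # None for gestures with no action assigned


ignored = handle_window(False, "down_to_up", 0.99)
triggered = handle_window(True, "down_to_up", 0.99)
```

Requiring an explicit start signal means that everyday movements never reach the lookup, however closely they resemble a recorded gesture.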

The code for the logic implementation is part of the cAInvas notebook.

Here is the sample output —

Other applications

- Sign language converter: Embedded in a wearable, this allows the gestures performed to be mapped to voice output.

- Gaming: Move your hands around for a more real-life experience.

- Music Generation

Written by: Ayisha D and Dheeraj Perumandla.
